Principles and Methodologies for Serial Performance Optimization

System optimization boiled down to eight techniques

Principles and Methodologies for Serial Performance Optimization, by Sujin Park, Mingyu Guan, Xiang Cheng, and Taesoo Kim (OSDI'25)

This paper is a psychological trojan horse for computer nerds of a certain vintage. Every paragraph of sections 3 and 4 inflates the ego a bit more. One arrives at section 5 feeling good about their performance optimization skillset, and then one learns that these skills can be replaced by an LLM. A faint hissing sound reaches one’s ears as one’s ego deflates into a shriveled piece of plastic on the ground.

Eight Methodologies

The authors reviewed 477 papers containing specific instances of serial performance optimization and boiled the techniques down into eight categories. Serial is the operative word here: this paper is about optimizing portions of code that cannot be parallelized. However, some of the techniques are applicable in computations that comprise a serial portion (the critical path) and a parallel portion.

Here are the techniques:

Batching

Amortizing a fixed cost over many items. For example, refactoring code so that some computation can be hoisted out of a (per-item) loop.

Caching

Store the results of a computation in memory so that they can be reused later. Memoization is a good example.
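A small memoization sketch using Python's standard `functools.lru_cache`. The Fibonacci function is my own illustration, not an example from the paper.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def fib(n):
    """Naive recursive Fibonacci; the cache stores each result after its first computation."""
    if n < 2:
        return n
    return fib(n - 1) + fib(n - 2)
```

Without the decorator this recursion recomputes the same subproblems exponentially many times. With it, each `fib(k)` is computed once and later calls are served from memory.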

Precomputing

Compute something earlier than is necessary (possibly speculatively). This works in programs which alternate between serial and parallel portions. If the precomputation can be done during a parallel portion, then it can be thought of as “free” because it is off of the critical path.
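As a rough sketch, assume a lookup table that the serial portion will need later. The table can be built in the background while other work proceeds. All names here are hypothetical, and CPython threads will not truly overlap CPU-bound work, so treat this as an illustration of the structure rather than a benchmark.

```python
from concurrent.futures import ThreadPoolExecutor


def build_popcount_table():
    """Precompute the number of set bits for every possible byte value."""
    return [bin(i).count("1") for i in range(256)]


def popcount32(x, table):
    """Count set bits in a 32-bit value using four table lookups."""
    return (table[x & 0xFF] + table[(x >> 8) & 0xFF]
            + table[(x >> 16) & 0xFF] + table[(x >> 24) & 0xFF])


def run(values):
    with ThreadPoolExecutor(max_workers=1) as pool:
        # Start the precomputation early, before its result is needed.
        future = pool.submit(build_popcount_table)
        # Stand-in for other work that happens in the meantime.
        checksum = sum(values)
        # The serial portion picks up the table, waiting only if it is not ready.
        table = future.result()
    return checksum, [popcount32(v, table) for v in values]
```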

Deferring

Act like one of my high schoolers: don’t do work now when it could be done later. This works great in late spring, when a teacher realizes they have assigned too much work for the year, so they cancel an assignment that most students (not mine) have already completed. Deferring interacts with other techniques:

Similar to precomputing, deferring can move work off of the critical path

Deferring can enable batching by deferring work until a large batch has been accumulated
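The second interaction can be sketched as a writer that postpones output until a batch has accumulated. `DeferredWriter` and its `sink` list are hypothetical names for illustration; a real sink would be a file, socket, or device.

```python
class DeferredWriter:
    """Buffers records and emits them to the sink one batch at a time."""

    def __init__(self, sink, batch_size=4):
        self.sink = sink              # a list that receives whole batches
        self.batch_size = batch_size
        self.pending = []

    def write(self, record):
        # Defer the real work: just remember the record for now.
        self.pending.append(record)
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self):
        # Emit everything accumulated so far as a single batch.
        if self.pending:
            self.sink.append(list(self.pending))
            self.pending.clear()
```

If a record is never needed (the cancelled assignment), dropping it from `pending` before the flush means the work is never done at all.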

Relaxation

Compute a quick and dirty approximation to the right answer rather than the exactly right answer.
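One simple form of relaxation is sampling. The sketch below is my own illustration, not taken from the paper: it estimates a mean from every tenth element instead of reading all of them.

```python
def exact_mean(values):
    """Reads every element."""
    return sum(values) / len(values)


def approx_mean(values, stride=10):
    """Reads only every `stride`-th element, trading accuracy for speed."""
    sample = values[::stride]
    return sum(sample) / len(sample)
```

How much error is acceptable depends entirely on the application. The stride here is an arbitrary choice.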

Contextualization

Make a generic component more efficient for a specific use case. For example, a library user could pass hints at runtime that give the library more information for making tradeoffs. Another example is profile-guided optimization, which lets the compiler make better decisions when compiling the generic library.
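A runtime hint might look something like this. The `assume_sorted` parameter is a hypothetical hint of my own invention: the generic path works for any list, while the hinted path exploits what the caller knows.

```python
from bisect import bisect_left


def contains(items, target, assume_sorted=False):
    """Membership test that takes a faster path when the caller hints at sortedness."""
    if assume_sorted:
        # Caller promises the list is sorted, so binary search is valid.
        i = bisect_left(items, target)
        return i < len(items) and items[i] == target
    # Generic path: linear scan, correct for any ordering.
    return target in items
```

The hint is a promise, not something the library checks. Passing `assume_sorted=True` for an unsorted list can produce wrong answers, which is the usual price of this kind of specialization.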

Hardware Specialization

Use a hardware accelerator to avoid paying the Turing Tax, the overhead that comes with running on general-purpose hardware.

Layering

Chip away at performance inefficiencies caused by the abstractions in a layered software architecture. For example, DPDK allows applications to bypass many networking abstractions.
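A toy model of the idea, with hypothetical class names that have nothing to do with DPDK's actual API: each layer adds a "hop" of overhead (standing in for copies, checks, or context switches), and the bypass path talks to the device directly.

```python
class Device:
    def __init__(self):
        self.sent = []
        self.hops = 0  # overhead accumulated by the layers above

    def send(self, payload):
        self.sent.append(payload)


class Transport:
    def __init__(self, device):
        self.device = device

    def send(self, payload):
        self.device.hops += 1  # per-layer overhead
        self.device.send(payload)


class Session:
    def __init__(self, transport):
        self.transport = transport

    def send(self, payload):
        self.transport.device.hops += 1  # per-layer overhead
        self.transport.send(payload)


def send_layered(session, payload):
    session.send(payload)  # app -> Session -> Transport -> Device


def send_bypass(device, payload):
    device.send(payload)   # app -> Device, skipping both layers
```

The bypass also skips whatever the layers were providing, so the application has to take on that responsibility itself.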

SysGPT

After finishing section 4 of the paper, I felt pretty good. My ego happily agreed with a world view that says that serial performance optimization can be expressed in terms of 8 “basis vectors”, all of which I had extensive experience with.

And then came section 5.

The authors fine-tuned GPT-4o with a dataset derived from analyzing SOSP and OSDI papers. Each item in the dataset comprises a problem description, observations, and solutions (in terms of the 8 techniques described earlier). The authors made data and scripts available here. The fine-tuned model is called SysGPT.
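To make the shape of a dataset item concrete, here is a guess at how one could be modeled. The field names and the example item are hypothetical; they are not the schema or data from the authors' release.

```python
from dataclasses import dataclass, field
from enum import Enum


class Technique(Enum):
    """The eight techniques described earlier."""
    BATCHING = "batching"
    CACHING = "caching"
    PRECOMPUTING = "precomputing"
    DEFERRING = "deferring"
    RELAXATION = "relaxation"
    CONTEXTUALIZATION = "contextualization"
    HARDWARE_SPECIALIZATION = "hardware specialization"
    LAYERING = "layering"


@dataclass
class DatasetItem:
    problem: str                       # problem description
    observations: list[str]            # what was observed about the system
    solutions: set[Technique] = field(default_factory=set)


# A made-up item for illustration only, not taken from the dataset.
example = DatasetItem(
    problem="(hypothetical) Each small write triggers its own flush.",
    observations=["(hypothetical) Flush cost dominates the write path."],
    solutions={Technique.BATCHING, Technique.DEFERRING},
)
```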

Results

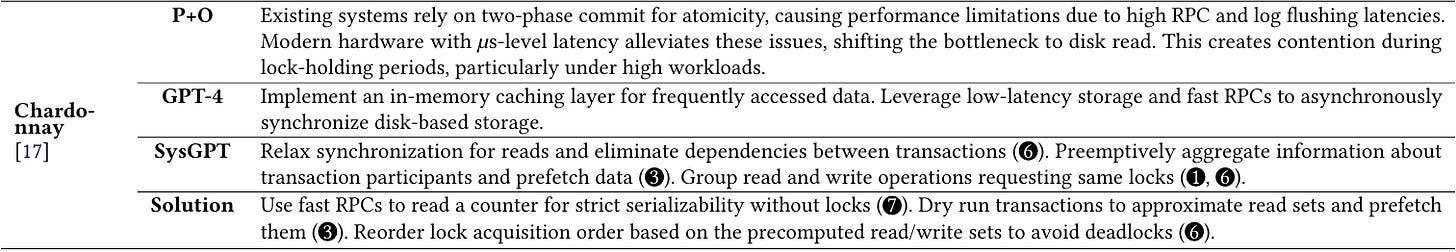

Table 4 shows example inputs (problems + observations), the output from GPT-4o, the output from SysGPT, and the actual solution from a real-world paper. Here is an example:

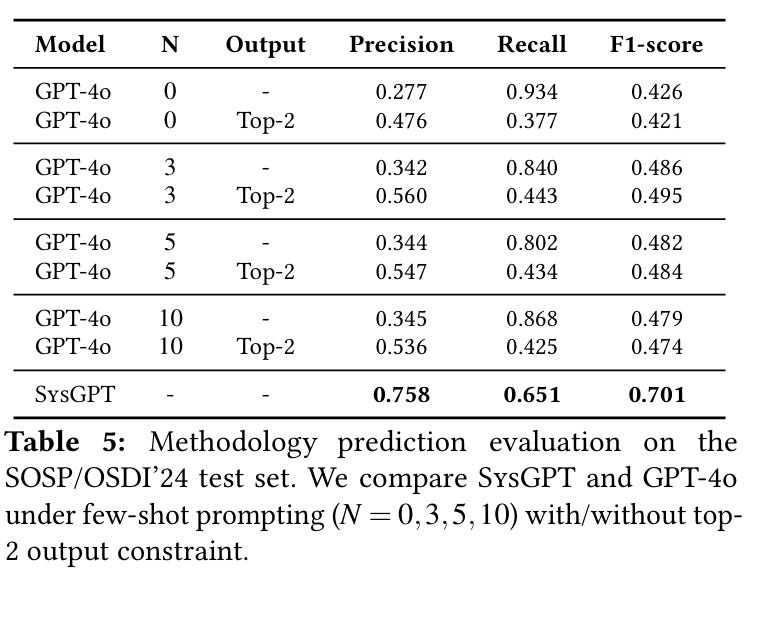

Table 5 has quantitative results, where each model is only asked to choose a subset of the 8 optimization strategies for a given problem (N represents the number of example problems given to the baseline GPT-4o model in a prompt):

Dangling Pointers

It would be interesting to extend these recipes to also include problems which can be parallelized.

This paper assumes that the problem and observations are produced by humans. It would be fascinating to see how much of that can be automated. For example, a model could have access to source code and profiling information, and output a set of observations about system performance before optimization.